Overview

Updated at July 9th, 2024

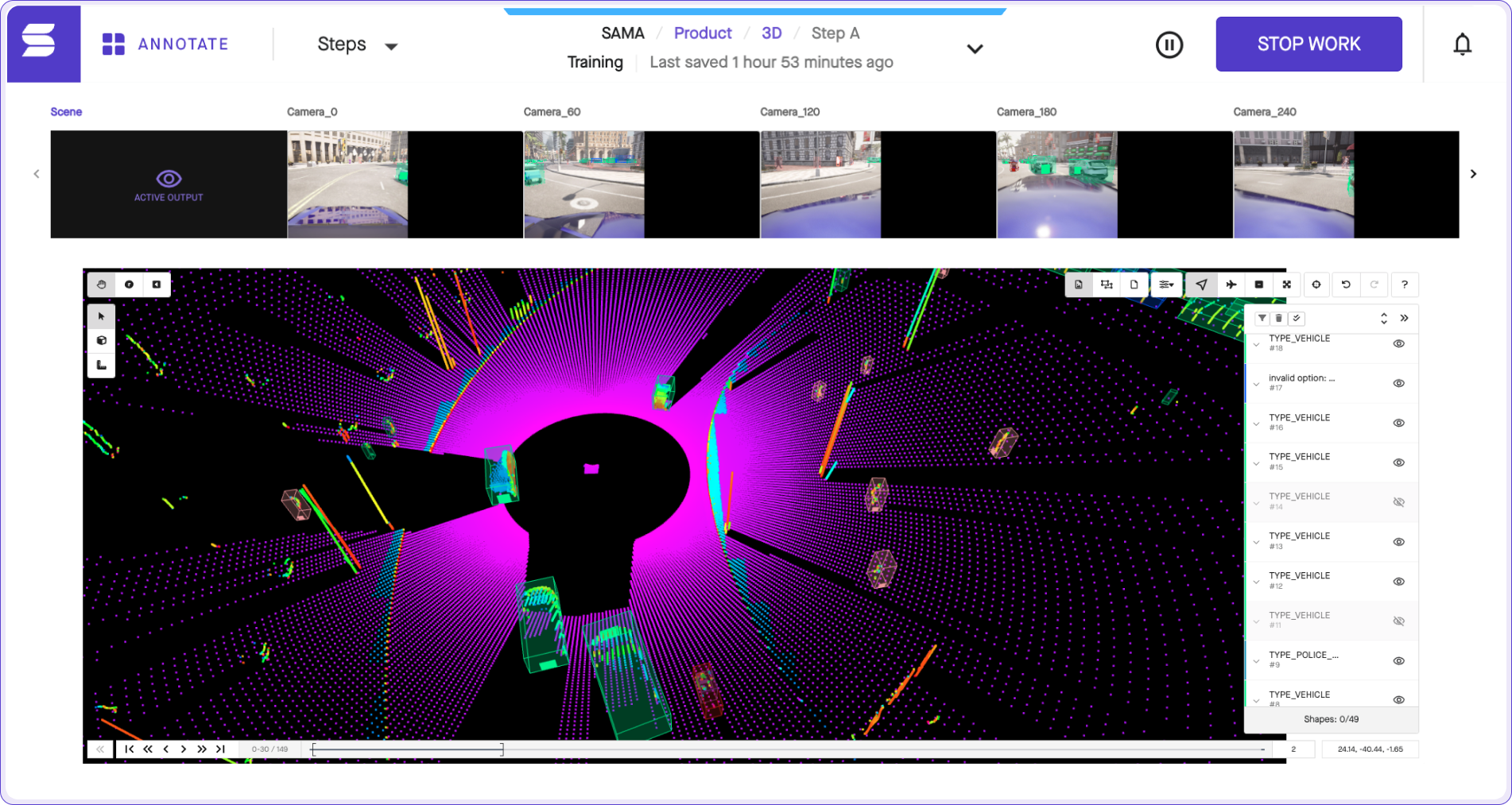

The Sama platform offers different features that empower users to extract meaningful insights from 3D sensor fusion data.

Assets That We Support

Single Frame

On the Sama platform, you can look closely at single frames. It's like checking out one picture at a time. You can study the details of that moment and see what's in it.

Continuous Frames

The platform also lets you see continuous frames. This is like watching a sequence of pictures, one after the other. It helps you understand how things move and change over time, like tracking the path of something or seeing how things behave as time goes on.

Single Point Cloud

Our platform supports single point clouds. You can inspect the details of one set of points captured at a single moment, like a snapshot.

Multi Point Cloud

Our platform supports multiple point clouds. Think of it as watching many sets of points change over time in a scene.

2D Cameras as reference only

The Sama platform supports 2D camera pictures on top of 3D sensor fusion scenes. By overlaying 2D camera data onto 3D sensor fusion scenes, users can enhance object recognition, improve tracking accuracy, and augment overall perception capabilities.

Annotation Types That We Support

To unlock the full potential of 3D sensor fusion data, our platform offers a wide range of annotation types that cater to various use cases.

3D Cuboid

Orthographic Shapes

Fused Annotation

Supported point cloud formats

Learn more about the Sama platform point cloud format and coordinate system

PCD

Point Cloud Data (PCD): Our platform is optimized for PCD files, and we highly recommend using this format. It supports ASCII, binary, and binary-compressed PCD files with the dimensions x, y, z, and intensity.

Note: RGB is not supported; you must provide intensity values instead.
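If your point clouds are not already in PCD, the sketch below shows one way to write an ASCII PCD with the x, y, z, and intensity dimensions described above. It follows the public PCD v0.7 header layout; the function name `to_ascii_pcd` is hypothetical and not part of any Sama tooling, and binary or binary-compressed output would need a different writer.

```python
from typing import Iterable, Tuple

Point = Tuple[float, float, float, float]  # (x, y, z, intensity)


def to_ascii_pcd(points: Iterable[Point]) -> str:
    """Serialize points as an unorganized ASCII PCD (v0.7) with x, y, z, intensity."""
    pts = list(points)
    header = [
        "# .PCD v0.7 - Point Cloud Data file format",
        "VERSION 0.7",
        "FIELDS x y z intensity",
        "SIZE 4 4 4 4",       # 4 bytes per field
        "TYPE F F F F",       # every field is a float
        "COUNT 1 1 1 1",
        f"WIDTH {len(pts)}",  # unorganized cloud: WIDTH is the point count
        "HEIGHT 1",
        "VIEWPOINT 0 0 0 1 0 0 0",
        f"POINTS {len(pts)}",
        "DATA ascii",
    ]
    body = [f"{x} {y} {z} {i}" for x, y, z, i in pts]
    return "\n".join(header + body) + "\n"


if __name__ == "__main__":
    cloud = [(1.0, 2.0, 0.5, 0.8), (3.2, -1.1, 0.4, 0.25)]
    print(to_ascii_pcd(cloud))
```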

Additional Types

Sama can also support other data formats, including custom ones. Please reach out to the Sama team for assistance.

- Lidar Data Exchange Format (LAS)

- Polygon File Format (PLY)

- Pointools format (PTS)

- Text Files (TXT)

SPC

Sama Point Cloud (SPC): This is our proprietary point cloud data format, developed to improve the efficiency and performance of point cloud processing. When you upload PCD (Point Cloud Data) files to our platform, we automatically convert them to SPC, a version optimized for our system. This conversion lets your point cloud data be processed quickly and accurately, providing a seamless experience when you work on 3D sensor fusion and annotation tasks on our platform.

📘 Note

Each data point should have (x,y,z) coordinates. Point intensity values are also supported (normalized to a 0-1 scale); values are encoded in the intensity dimension.
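How raw sensor intensities should be mapped onto the 0-1 scale is not specified here. A minimal sketch, assuming either a known sensor maximum (such as 255 for 8-bit intensity) or per-frame min-max scaling; the helper `normalize_intensity` is hypothetical:

```python
from typing import List, Optional


def normalize_intensity(values: List[float], max_value: Optional[float] = None) -> List[float]:
    """Map raw intensity readings onto the 0-1 range.

    With max_value (e.g. 255 for an 8-bit sensor): divide by it and clip to [0, 1].
    Without it: min-max scale over the values of this frame.
    """
    if not values:
        return []
    if max_value is not None:
        return [min(max(v / max_value, 0.0), 1.0) for v in values]
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant intensity: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]
```

A fixed sensor maximum keeps the same surface at the same value across frames, whereas per-frame min-max scaling can shift values from frame to frame, so the first option is usually preferable for sequences.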

Preparing 3D sensor fusion data

In the following sections, we walk you through the data required for the sensor fusion workflow.

In this section, we present camera intrinsics and extrinsics separately for clarity. However, to upload them to the Sama platform, you must combine the extrinsics and intrinsics for each camera into a single record, as in the following example.

Example

```json
[
  {
    "x": 0.0,
    "y": 0.0,
    "z": 0.0,
    "rotation_x": -0.136,
    "rotation_y": -0.005,
    "rotation_z": 0.991,
    "rotation_w": 0.001,
    "f_x": 3255.72,
    "f_y": 3255.72,
    "c_x": 1487.64,
    "c_y": 1014.38,
    "k1": 0.03016,
    "k2": -0.27366,
    "k3": 0.00109,
    "k4": -0.00192,
    "p1": 0.01,
    "p2": 0.001
  }
]
```

Camera Image

Sama supports .mp4, .mov, and .avi video files, but recommends providing a .png or .jpg image for each camera frame. The number of camera frames should match the number of point cloud files, and each camera frame should be synced to the nearest point cloud timestamp.

Input file format

.zip files of camera frames

```text
front_camera.zip
  0001.jpg
  0002.jpg
  0003.jpg
back_camera.zip
  0001.jpg
  0002.jpg
  0003.jpg
```

In this example, the front camera frames are stored in "front_camera.zip", with each frame named according to a numerical sequence (e.g., 0001.jpg, 0002.jpg). Similarly, the back camera frames are organized in "back_camera.zip" using the same naming convention.
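A minimal sketch of packaging camera frames into this layout with Python's standard `zipfile` module. It assumes the source images are already listed in capture order; `package_frames` and its `width` parameter are hypothetical names chosen for illustration.

```python
import zipfile
from pathlib import Path
from typing import List


def package_frames(frame_paths: List[Path], zip_path: Path, width: int = 4) -> List[str]:
    """Write frames into zip_path as 0001.jpg, 0002.jpg, ... in the given order.

    frame_paths must already be in capture order. Archive names are
    zero-padded to `width` digits so they sort correctly as plain text.
    """
    names = []
    with zipfile.ZipFile(zip_path, "w") as zf:
        for index, src in enumerate(frame_paths, start=1):
            name = f"{index:0{width}d}{src.suffix.lower()}"  # e.g. "0001.jpg"
            zf.write(src, arcname=name)
            names.append(name)
    return names
```

Because the archive names are regenerated, what matters is the order in which you pass the frames, not their original filenames.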

📘 Note

We recommend including the timestamp in each frame's filename. Otherwise, make sure the frames follow a naming convention that sorts into the correct order as plain text, such as zero-padded numbers (e.g., 0001.jpeg, 0002.jpeg, 0010.jpeg, 0100.jpeg).
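Zero-padded names sort correctly as plain text, while unpadded numbers do not. The snippet below shows the difference and a numeric-aware ("natural") sort key you could use to check or rename existing files; whether Sama's importer sorts naturally is not stated here, so zero padding remains the safer choice. `natural_key` is a hypothetical helper.

```python
import re
from typing import List, Union


def natural_key(name: str) -> List[Union[int, str]]:
    """Split a filename into text and number chunks so "10.jpg" sorts after "2.jpg"."""
    parts = re.split(r"(\d+)", name)
    # With a capturing group, odd positions are always the digit runs.
    return [int(p) if i % 2 else p.lower() for i, p in enumerate(parts)]


frames = ["10.jpg", "2.jpg", "1.jpg"]
print(sorted(frames))                   # ['1.jpg', '10.jpg', '2.jpg']  (plain text order)
print(sorted(frames, key=natural_key))  # ['1.jpg', '2.jpg', '10.jpg']  (numeric-aware order)
print(sorted(["0010.jpg", "0002.jpg", "0001.jpg"]))  # ['0001.jpg', '0002.jpg', '0010.jpg']
```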

Camera Image Compatibility

Our platform supports video file formats such as .mp4, .mov, and .avi for convenience. However, for the best compatibility and performance, we strongly recommend providing an individual .png or .jpg file for each camera frame. This helps the camera images integrate reliably with the corresponding point cloud data and enables accurate synchronization and alignment.

Point Cloud Data

Provide a .zip file (or folder) of point cloud frames for a single task:

```text
point_cloud.zip
  0001.pcd
  0002.pcd
  0003.pcd
```

📘 Note

To ensure accurate alignment between camera images and point cloud data, it is crucial to synchronize the camera frames with the nearest timestamp in the point cloud files. This synchronization allows for precise temporal correlation, facilitating the fusion of visual information and 3D spatial data.
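A minimal sketch of nearest-timestamp matching, assuming both timestamp lists use the same unit (for example, seconds) and the camera timestamps are sorted. `match_nearest` is a hypothetical helper; for each point cloud frame it returns the index of the camera frame to pair with it, which you could use to select camera frames so that the counts match.

```python
import bisect
from typing import List


def match_nearest(cloud_ts: List[float], camera_ts: List[float]) -> List[int]:
    """For each point cloud timestamp, return the index of the nearest camera timestamp.

    camera_ts must be sorted in ascending order; ties go to the earlier frame.
    """
    if not camera_ts:
        raise ValueError("camera_ts is empty")
    matches = []
    for t in cloud_ts:
        i = bisect.bisect_left(camera_ts, t)  # first camera frame at or after t
        # The nearest frame is either the one just before t or the one at/after t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(camera_ts)]
        matches.append(min(candidates, key=lambda j: abs(camera_ts[j] - t)))
    return matches


print(match_nearest([0.0, 0.1, 0.2], [0.01, 0.09, 0.22, 0.3]))  # [0, 1, 2]
```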

Camera Intrinsic

Camera calibration data plays a vital role in the platform, enabling accurate projection of 3D annotations onto 2D images. When you provide complete calibration information, annotations align precisely with the camera view, which makes annotation more intuitive and effective.

You need to provide the following information:

- Focal Length: Expressed in pixels, the focal length is the distance between the lens's optical center and the image sensor, divided by the pixel size. It is typically provided as two values, f_x and f_y, for the horizontal and vertical directions, respectively.

- Principal Point: Also specified in pixels, the principal point is the image coordinate where the camera's optical axis meets the image. It is given by c_x and c_y, the horizontal and vertical offsets from the image's top-left corner.

- Distortion (Optional): The Sama platform supports the distortion parameters k1, k2, k3, k4, p1, and p2, which account for lens distortion. These parameters correct for image deformations caused by lens imperfections and improve the accuracy of annotation projections. Note that further support for distortion parameters is planned.
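To see how these values are used, the sketch below projects a 3D point in camera coordinates to a pixel with the standard pinhole model (u = f_x·x/z + c_x, v = f_y·y/z + c_y), ignoring distortion. It assumes the point is already in the camera frame and converts from Sama's default camera convention (x forward, y left, z up, described under Coordinate System) to the usual optical convention (x right, y down, z forward). The function names are hypothetical, and this is not the platform's own projection code.

```python
from typing import Optional, Tuple


def sama_to_optical(x: float, y: float, z: float) -> Tuple[float, float, float]:
    """Convert a camera-frame point from x-forward/y-left/z-up to x-right/y-down/z-forward."""
    return -y, -z, x


def project_pinhole(x: float, y: float, z: float,
                    f_x: float, f_y: float, c_x: float, c_y: float) -> Optional[Tuple[float, float]]:
    """Project a point in optical camera coordinates (z forward) to pixel (u, v).

    Lens distortion is ignored. Returns None for points at or behind the camera.
    """
    if z <= 0:
        return None
    return f_x * x / z + c_x, f_y * y / z + c_y
```

For example, `project_pinhole(*sama_to_optical(10.0, -1.0, -0.5), 3255.72, 3255.72, 1487.64, 1014.38)`, a point 10 m ahead, 1 m to the right, and 0.5 m below the camera, lands at roughly pixel (1813.21, 1177.17) with the example intrinsics in this section.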

JSON

```json
{
  "f_x": 3255.72,
  "f_y": 3255.72,
  "c_x": 1487.64,
  "c_y": 1014.38,
  "k1": 0.03016,
  "k2": -0.27366,
  "k3": 0.00109,
  "k4": -0.00192,
  "p1": 0.01,
  "p2": 0.001
}
```

CSV

```csv
f_x,f_y,c_x,c_y,k1,k2,k3,k4,p1,p2
3255.72,3255.72,1487.64,1014.38,0.03016,-0.27366,0.00109,-0.00192,0.01,0.001
```

📘 Note

You can provide a single calibration record (as for front_camera), which is applied to every frame, or multiple calibration records (as for back_camera), one applied to each frame.

Distortion

📘 Note

The Sama platform supports only one distortion model: Kannala-Brandt. If your cameras use a different model, you need to provide undistorted images.
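For reference, the Kannala-Brandt model maps the angle θ between an incoming ray and the optical axis to a distorted angle θ_d = θ(1 + k1·θ² + k2·θ⁴ + k3·θ⁶ + k4·θ⁸), which is then scaled by the focal length. The sketch below implements only these radial k1 to k4 terms, as in the common fisheye formulation; how Sama applies the p parameters is not described here, so they are omitted. It assumes a point already in optical camera coordinates (z forward), and the function name is hypothetical.

```python
import math
from typing import Tuple


def kannala_brandt_project(x: float, y: float, z: float,
                           f_x: float, f_y: float, c_x: float, c_y: float,
                           k1: float, k2: float, k3: float, k4: float) -> Tuple[float, float]:
    """Project a point in optical camera coordinates with the Kannala-Brandt radial model."""
    r = math.hypot(x, y)
    if r == 0.0:
        return c_x, c_y  # on the optical axis: the point lands on the principal point
    theta = math.atan2(r, z)  # angle between the ray and the optical axis
    t2 = theta * theta
    theta_d = theta * (1 + k1 * t2 + k2 * t2**2 + k3 * t2**3 + k4 * t2**4)
    scale = theta_d / r  # distorted radius per unit of (x, y)
    return f_x * x * scale + c_x, f_y * y * scale + c_y
```

With all k values set to zero this reduces to an equidistant fisheye projection (pixel radius proportional to θ), not to the pinhole model, which is why wide-angle lenses are usually calibrated with it.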

If you are using the Kannala-Brandt model, provide your data as follows:

JSON

```
{
  <other fields: x, y, z, rotation_*>,
  "distortion_model": "kannala_brandt",
  "k1": 1,
  <k2, k3, k4, p1, p2, ..., p4 in the same "key": value form>
}
```

CSV

```
<other fields: x, y, z, rotation_*>,distortion_model,k1,...
<values for other fields>,kannala_brandt,1,...
```

Camera Extrinsic

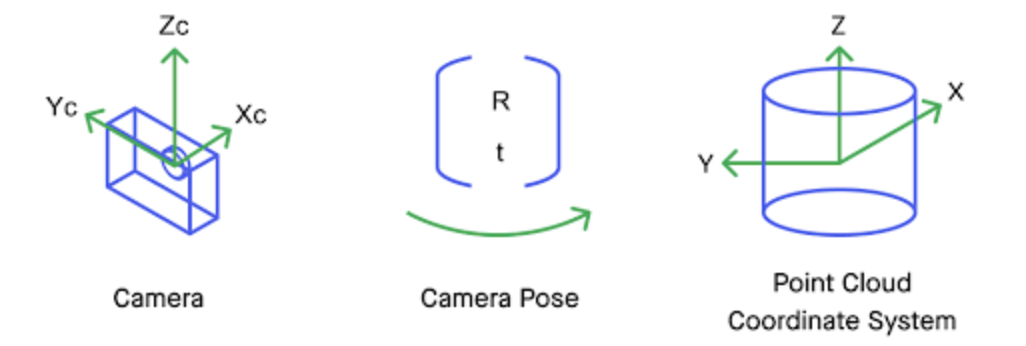

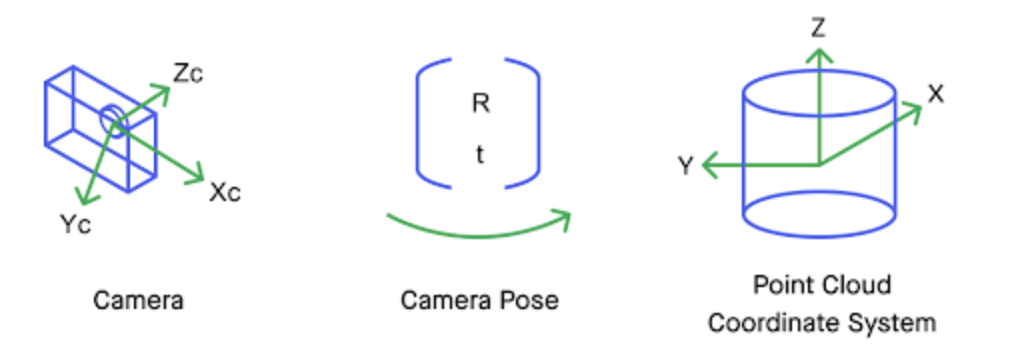

Camera pose in the point cloud’s coordinate system

The camera's pose, expressed as inverse extrinsic parameters, defines its position and orientation in the point cloud's coordinate system. The pose data consists of:

- Position: Represented by the coordinates (x, y, z), the camera's position indicates its location in the point cloud coordinate system. It defines the translation vector from the point cloud's origin to the camera's optical center.

- Rotation: Described by quaternion values (rotation_x, rotation_y, rotation_z, rotation_w), the camera's rotation represents its orientation relative to the point cloud's coordinate system. Quaternion values ensure accurate and efficient representation of rotation, facilitating precise alignment of the camera's view with the point cloud data.
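A minimal sketch of using this pose to bring a point from the point cloud's coordinate system into the camera's. It assumes the pose maps camera coordinates to point cloud coordinates (p_cloud = R·p_cam + t, consistent with it being the inverse of the extrinsics) and that quaternions are given as (rotation_x, rotation_y, rotation_z, rotation_w). The helper names are hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def quaternion_to_matrix(qx: float, qy: float, qz: float, qw: float) -> List[List[float]]:
    """Rotation matrix for a quaternion given as (rotation_x, rotation_y, rotation_z, rotation_w)."""
    n = math.sqrt(qx * qx + qy * qy + qz * qz + qw * qw)
    qx, qy, qz, qw = qx / n, qy / n, qz / n, qw / n  # tolerate slightly non-unit stored values
    return [
        [1 - 2 * (qy * qy + qz * qz), 2 * (qx * qy - qz * qw), 2 * (qx * qz + qy * qw)],
        [2 * (qx * qy + qz * qw), 1 - 2 * (qx * qx + qz * qz), 2 * (qy * qz - qx * qw)],
        [2 * (qx * qz - qy * qw), 2 * (qy * qz + qx * qw), 1 - 2 * (qx * qx + qy * qy)],
    ]


def cloud_to_camera(point: Vec3, position: Vec3, rotation: Tuple[float, float, float, float]) -> Vec3:
    """Express a point cloud point in the camera frame, given the camera pose in the cloud frame.

    Inverts p_cloud = R @ p_cam + t, i.e. p_cam = R^T @ (p_cloud - t).
    """
    R = quaternion_to_matrix(*rotation)
    d = [point[i] - position[i] for i in range(3)]
    # (R^T d)[c] = sum over r of R[r][c] * d[r]
    return tuple(sum(R[r][c] * d[r] for r in range(3)) for c in range(3))
```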

Front Camera

```json
[
  {
    "x": 0.0,
    "y": 0.0,
    "z": 0.0,
    "rotation_x": 0.0,
    "rotation_y": 0.131,
    "rotation_z": 0.0,
    "rotation_w": 0.991,
    "f_x": 1316.358613,
    "f_y": 1316.358613,
    "c_x": 760,
    "c_y": 701
  }
]
```

Back Camera

```json
[
  {
    "x": 0.0,
    "y": 0.0,
    "z": 0.0,
    "rotation_x": -0.136,
    "rotation_y": -0.005,
    "rotation_z": 0.991,
    "rotation_w": 0.001,
    "f_x": 1316.358613,
    "f_y": 1316.358613,
    "c_x": 760,
    "c_y": 701
  }
]
```
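Because the intrinsics and extrinsics must be combined into one record per camera before upload, a small merge step can help. A minimal sketch, assuming pose and intrinsics are held as dictionaries with the field names used in these examples; `combine_calibration` is hypothetical, and a single intrinsics record is reused for every frame, as in the calibration note above.

```python
import json
from typing import Dict, List

# Field names taken from the calibration examples in this section.
POSE_KEYS = ("x", "y", "z", "rotation_x", "rotation_y", "rotation_z", "rotation_w")
INTRINSIC_KEYS = ("f_x", "f_y", "c_x", "c_y")


def combine_calibration(poses: List[Dict[str, float]],
                        intrinsics: List[Dict[str, float]]) -> List[Dict[str, float]]:
    """Merge camera poses with intrinsics into one record per pose.

    One intrinsics record is reused for every pose; otherwise the two
    lists must have the same length and are paired in order.
    """
    if len(intrinsics) not in (1, len(poses)):
        raise ValueError("provide one intrinsics record, or one per pose")
    combined = []
    for i, pose in enumerate(poses):
        intr = intrinsics[0] if len(intrinsics) == 1 else intrinsics[i]
        missing = [k for k in POSE_KEYS if k not in pose]
        missing += [k for k in INTRINSIC_KEYS if k not in intr]
        if missing:
            raise KeyError(f"record {i} is missing {missing}")
        # Optional distortion keys (k1..k4, p1, p2) in intr are carried over as-is.
        combined.append({**pose, **intr})
    return combined


if __name__ == "__main__":
    front_pose = {"x": 0.0, "y": 0.0, "z": 0.0, "rotation_x": 0.0,
                  "rotation_y": 0.131, "rotation_z": 0.0, "rotation_w": 0.991}
    front_intrinsics = {"f_x": 1316.358613, "f_y": 1316.358613, "c_x": 760, "c_y": 701}
    print(json.dumps(combine_calibration([front_pose], [front_intrinsics]), indent=2))
```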

By default, Sama’s convention is that the origin of the camera’s coordinate system is at its optical center, the x-axis points down the lens barrel out of the lens, the z-axis points up, and the y-axis points left.

We also support other conventions, for example, where the origin of the camera’s coordinate system is at its optical center, the z-axis points down the lens barrel out of the lens, the x-axis points right, and the y-axis points down.

⚠️ Warning

Please inform the Sama team of your camera’s coordinate system convention.

Coordinate System

By default, Sama follows a specific coordinate system convention for camera calibration. In this convention:

- The origin of the camera's coordinate system is located at its optical center.

- The x-axis points down the lens barrel, extending out of the lens.

- The z-axis points upward.

- The y-axis points left.

Alternative Coordinate System Conventions

Sama also supports other coordinate system conventions to accommodate various camera setups. For instance, an alternative convention might involve:

- The origin of the camera's coordinate system at its optical center.

- The z-axis pointing down the lens barrel, extending out of the lens.

- The x-axis pointing to the right.

- The y-axis pointing downward.

To ensure proper calibration and alignment, inform the Sama team of the specific coordinate system convention your cameras use.

The positive z-direction must represent "up" in the physical world, positive y must represent "left", and positive x must represent "forward", forming a right-handed coordinate system. Sama uses the roll-pitch-yaw (XYZ) Euler angle convention, where roll is the rotation around the x-axis, pitch around the y-axis, and yaw around the z-axis.
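If your calibration tools report roll, pitch, and yaw but the upload fields expect quaternions, you can convert them. This sketch assumes angles in radians and the common fixed-axis reading of the XYZ convention (roll about x, then pitch about y, then yaw about z, so R = Rz(yaw)·Ry(pitch)·Rx(roll)); confirm the composition order with the Sama team before relying on it. `rpy_to_quaternion` is hypothetical.

```python
import math
from typing import Tuple


def rpy_to_quaternion(roll: float, pitch: float, yaw: float) -> Tuple[float, float, float, float]:
    """Convert roll (about x), pitch (about y), yaw (about z), in radians, to
    (rotation_x, rotation_y, rotation_z, rotation_w), assuming R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    cr, sr = math.cos(roll / 2), math.sin(roll / 2)
    cp, sp = math.cos(pitch / 2), math.sin(pitch / 2)
    cy, sy = math.cos(yaw / 2), math.sin(yaw / 2)
    return (
        sr * cp * cy - cr * sp * sy,  # rotation_x
        cr * sp * cy + sr * cp * sy,  # rotation_y
        cr * cp * sy - sr * sp * cy,  # rotation_z
        cr * cp * cy + sr * sp * sy,  # rotation_w
    )
```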

Whether the point cloud coordinate system origin is a fixed point on the ego vehicle or a fixed point in world coordinates, any pre-annotations, camera calibration or pose data must be given in that same coordinate system. Delivered cuboid and other shape annotations will also be in that same coordinate system.

Fixed-world

Fixed-world refers to a 3D environment that is anchored to the world, rather than a 3D environment that is relative to a moving object like the ego vehicle. Annotating in a fixed-world environment can be much faster and result in better quality, especially with static objects, as they mostly stay in place as the scene progresses.

Pose data

You need to provide either the point cloud sensor's pose or the vehicle's pose in the world coordinate system, synced to the point cloud frames:

- Position [x,y,z]

- Rotation [rotation_x,rotation_y,rotation_z,rotation_w]
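To illustrate how per-frame pose relates the sensor (or vehicle) frame to the fixed world frame, the sketch below maps one point into world coordinates with that frame's position and unit quaternion (p_world = R·p_sensor + position), using the standard quaternion rotation identity. The names are hypothetical; it only illustrates the relationship and is not a description of Sama's internal processing.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (rotation_x, rotation_y, rotation_z, rotation_w)


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def sensor_to_world(point: Vec3, position: Vec3, rotation: Quat) -> Vec3:
    """Map a point from the sensor frame into the world frame with that frame's pose.

    Assumes a unit quaternion. Rotation uses p' = p + w*t + q_v x t, where t = 2 (q_v x p).
    """
    qv, w = rotation[:3], rotation[3]
    t = tuple(2 * c for c in cross(qv, point))
    qt = cross(qv, t)
    rotated = tuple(point[i] + w * t[i] + qt[i] for i in range(3))
    return tuple(rotated[i] + position[i] for i in range(3))
```

Applying this to every point of every frame with that frame's pose is what makes static objects stay put in a fixed-world view.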

File format

A .csv or .json file describing the pose of each frame.
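The exact column names for pose files are not listed in this section. A minimal sketch, assuming the same field names as the calibration examples (x, y, z, rotation_x, rotation_y, rotation_z, rotation_w) and one record per point cloud frame in frame order; confirm the expected layout with the Sama team. The helper names are hypothetical.

```python
import csv
import io
import json
from typing import Dict, List

# Assumed field names, mirroring the calibration examples.
FIELDS = ["x", "y", "z", "rotation_x", "rotation_y", "rotation_z", "rotation_w"]


def poses_to_csv(poses: List[Dict[str, float]]) -> str:
    """One header row, then one row per frame in frame order."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    for pose in poses:
        writer.writerow({k: pose[k] for k in FIELDS})
    return buf.getvalue()


def poses_to_json(poses: List[Dict[str, float]]) -> str:
    """A JSON array with one object per frame in frame order."""
    return json.dumps([{k: pose[k] for k in FIELDS} for pose in poses], indent=2)
```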


toolkit

Output data JSON format

Explore more of the Sama JSON output format. Get a better understanding of how every annotation is delivered in this file.

How to navigate the interface when reviewing data

| Topic | Description |
| --- | --- |
| Reviewing tasks | Review the quality of a batch. All Sama projects are held to a quality SLA. |
| Status of tasks | Search a task and view its ID, creation date, submitted date, and state. |
| Task read-only mode | You can view any task in the workspace in read-only mode. |
| Exporting tasks | You can export tasks at any time. |
| Monitoring annotation progress | Check the different states of a task. |

How to navigate the Sama platform

| Topic | Description |
| --- | --- |
| Display Shapes | Adjust the image and shapes for better perception while you work. |
| View Settings | Personalize how shapes are displayed in the workspace. |
| Cameras and Ground View | Adjust the camera's view as well as the ground and route for each annotation. |
| Enhanced Point Cloud | View every single frame on a task. |
| Other Tools | Learn more about mouse coordinates, the shape panel, thumbnails, and timelines. |

Importing pre-annotations

Leveraging pre-annotated data can speed up and improve the annotation process in a variety of scenarios.

Consider the following situations where creating tasks from pre-annotated data can be particularly beneficial:

| Scenario | Description |
| --- | --- |
| Model Validation Workflows | When validating machine learning models, pre-annotated data serves as a valuable resource. Models can generate initial annotations that accelerate the annotation process. By creating tasks from pre-annotated data, annotators can focus on refining and validating the generated annotations, ensuring the accuracy and quality of the model's outputs. |
| Accelerating Annotation with Model Pre-Annotations | If you have a model that produces pre-annotations, using these initial annotations can significantly speed up the overall annotation process. Creating tasks from pre-annotated data lets human annotators validate and refine the model's suggestions, reducing the time and effort required for manual annotation. |
| Rectifying Mistakes in Previously Delivered Tasks | When previously delivered tasks contain errors or inaccuracies, creating new tasks from the pre-annotated data provides an opportunity for corrections. By uploading the existing tasks to a new project, annotators can review and fix any mistakes, ensuring the final annotations are accurate and high quality. |
| Updating Previous Projects with Additional Annotations | When a previous project needs additional annotations, pre-annotated data simplifies the process. By creating new tasks based on the existing annotations, annotators can add new information, expanding the dataset and making the project more comprehensive. |